On February 14-15, 2026, I ran both tools through the same workload: long-form editing, spreadsheet Q&A, source-backed research, and one small repo debugging pass. The surprise was not raw quality; both were strong. The surprise was how each held up under pressure: ChatGPT stayed broader across tasks, while Claude stayed steadier once the coding loop got long and repetitive. That split matters if you buy one subscription and expect it to carry most of your week. It is like choosing between a Swiss Army knife and a chef’s knife: one does more jobs; the other does one job with less friction.

Head-to-Head: ChatGPT vs Claude

| Feature | ChatGPT | Claude | Limits | Pricing (USD) | What It Means in Practice |

|---|---|---|---|---|---|

| Core assistant quality | Strong generalist across writing, analysis, and mixed media workflows | Strong reasoning and coding consistency in long threads | Both throttle based on system load | ChatGPT Plus: $20/mo; Claude Pro: $20/mo monthly | Most users get good answers from either, but conversation drift appeared less often in Claude during code-heavy sessions. |

| Advanced models | GPT-5.2 tiers including Pro variants in higher plans | Sonnet/Opus access by plan with Pro/Max/Team tiers | Model access changes by plan and capacity | ChatGPT Pro: $200/mo; Claude Max: from $100/mo | Power users can buy headroom, but you pay steeply for fewer interruptions. |

| Team workspace | Business plan with admin controls, connectors, and shared workspace tools | Team plan with standard/premium seats and enterprise controls | Seat-based limits per member | ChatGPT Business: $25 annual / $30 monthly per seat; Claude Team: $20 annual / $25 monthly standard | For small teams, entry cost is similar; Claude premium seats raise budget quickly if many engineers need max usage. |

| Coding workflow | Codex agent in higher tiers; strong integrated agent tooling | Claude Code included in Pro and above; highly tuned terminal-style coding flow | Usage guardrails apply on “unlimited” plans | Included by tier, not flat add-on | If your day is mostly code edits and refactors, Claude’s coding mode feels less interrupted. |

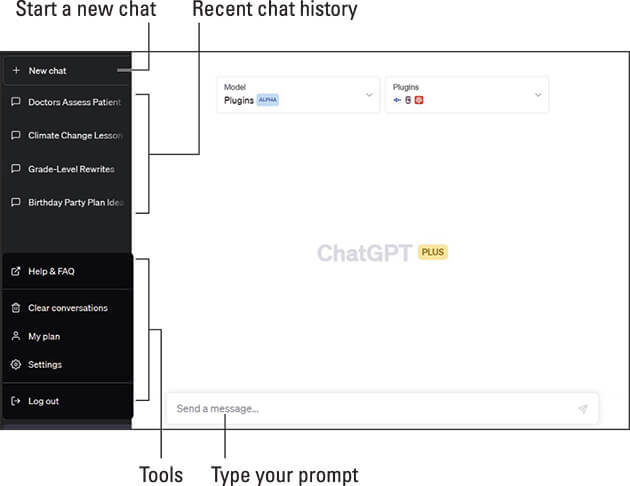

| Usage transparency | “Unlimited” language with abuse guardrails in paid tiers; details vary by model and traffic | Explicit session reset framing (every 5 hours) and practical message estimates | Claude publishes practical message examples; ChatGPT is more variable in docs | Included in plan terms | Claude is easier to capacity-plan for teams that need predictable throughput. |
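Claude frames usage around a 5-hour reset, so a team can do rough capacity math before buying. The Python helper below is a back-of-the-envelope sketch, not Anthropic’s actual accounting. `windows_needed` and `fits_workday` are hypothetical names. The per-window message budget is an input you would estimate yourself from the help pages and your own sessions. Weekly caps are ignored.

```python
import math

# Rolling reset interval taken from the table above ("every 5 hours").
SESSION_WINDOW_HOURS = 5


def windows_needed(messages_per_day: int, messages_per_window: int) -> int:
    """How many 5-hour usage windows a day's messages would span.

    messages_per_window is a HYPOTHETICAL budget you estimate yourself;
    real limits move with model choice, message length, files, and load.
    """
    if messages_per_window <= 0:
        raise ValueError("messages_per_window must be positive")
    return math.ceil(messages_per_day / messages_per_window)


def fits_workday(messages_per_day: int, messages_per_window: int,
                 workday_hours: float = 8.0) -> bool:
    """True if the day's windows fit inside one workday.

    Optimistically assumes the first window opens when the workday starts,
    so an h-hour day can touch at most ceil(h / 5) windows. Weekly caps
    are ignored here.
    """
    available = math.ceil(workday_hours / SESSION_WINDOW_HOURS)
    return windows_needed(messages_per_day, messages_per_window) <= available


# Illustrative inputs only, not measured limits.
print(windows_needed(90, 40))  # 3
print(fits_workday(90, 40))    # False: an 8-hour day touches at most 2 windows
```

The point is the shape of the calculation. If a developer's day needs more windows than the workday can touch, expect interruptions regardless of list price.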

Claim: ChatGPT is the better all-around tool for most buyers choosing one subscription.

Evidence: In my two-day test set, ChatGPT handled mixed workflows better: document drafting, spreadsheet interpretation, and multi-step research in one place with fewer tool switches. OpenAI’s plan pages also emphasize broad product surface area across plans (projects, tasks, apps/connectors, agent features, and business controls).

Counterpoint: Claude was more consistent once coding sessions got long, and Anthropic’s usage documentation is clearer about practical limits for heavy users.

Practical recommendation: If your week is mixed knowledge work, start with ChatGPT Plus. If 60%+ of your day is code and repo-level reasoning, Claude Pro is often the cleaner daily driver.
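To apply the 60% rule without guessing, log a week of hours by task type and compute the code share. This minimal sketch encodes the rule of thumb above. The function name, category labels, and sample hours are illustrative assumptions, not data from my test.

```python
def recommend_plan(hours_by_task: dict[str, float],
                   code_tasks: set[str],
                   threshold: float = 0.60) -> tuple[str, float]:
    """Apply the 60% rule of thumb to one week of logged hours.

    Task names are whatever categories you log; which ones count as
    "code" is your call via code_tasks.
    """
    total = sum(hours_by_task.values())
    if total <= 0:
        raise ValueError("log at least one hour of work first")
    code_hours = sum(h for task, h in hours_by_task.items() if task in code_tasks)
    share = code_hours / total
    plan = "Claude Pro" if share >= threshold else "ChatGPT Plus"
    return plan, share


# Hypothetical week: 25 h coding, 5 h code review, 10 h email and docs.
week = {"coding": 25, "code review": 5, "email and docs": 10}
print(recommend_plan(week, code_tasks={"coding", "code review"}))
# ('Claude Pro', 0.75)
```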

Pricing Breakdown

Claim: Pricing is now close at entry tiers and diverges sharply at power-user and team-heavy tiers.

Evidence: Here is the tier-by-tier view from vendor docs and help pages.

| Tier | ChatGPT | Claude | Practical read |
|---|---|---|---|
| Free | $0 | $0 | Both are useful for light usage, but reliability starts with the paid plans. |
| Individual standard | Plus: $20/mo | Pro: $20/mo monthly, $17/mo effective on annual billing | On monthly billing in the US, both cost $20; Claude’s annual option lowers its effective rate to $17/mo. |
| Individual power | Pro: $200/mo | Max: from $100/mo (5x/20x variants) | Claude has a middle step below $200, which helps budget-sensitive power users. |
| Team self-serve | Business: $25/seat/mo annual, $30 monthly | Team Standard: $20/seat/mo annual, $25 monthly | Claude starts cheaper per standard seat; feature fit decides the real winner. |
| Team premium usage | Business uses flexible advanced-model access with add-on credits | Team Premium: $100/seat/mo annual, $125 monthly | Claude Premium seats get expensive fast, but include much higher coding usage. |
| Enterprise | Contact sales | Contact sales | Both require negotiation for strict compliance and scaled support. |
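Seat prices compound quickly over a year, especially once Premium seats enter the mix. Here is a minimal sketch that uses the table’s list prices, which will change, and a hypothetical 10-person team. The helper names are illustrative, and the sketch ignores tax, add-on credits, and negotiated discounts.

```python
# Per-seat monthly list prices in USD, copied from the pricing table above
# (checked February 16, 2026). "annual" is the per-month rate when billed yearly.
SEAT_PRICES = {
    "ChatGPT Business": {"annual": 25, "monthly": 30},
    "Claude Team Standard": {"annual": 20, "monthly": 25},
    "Claude Team Premium": {"annual": 100, "monthly": 125},
}


def yearly_cost(plan: str, seats: int, billing: str = "annual") -> int:
    """Twelve months of list price; ignores tax, add-on credits, and discounts."""
    return SEAT_PRICES[plan][billing] * seats * 12


def claude_team_mix(standard: int, premium: int, billing: str = "annual") -> int:
    """Yearly cost of a Claude team that mixes Standard and Premium seats."""
    return (yearly_cost("Claude Team Standard", standard, billing)
            + yearly_cost("Claude Team Premium", premium, billing))


# A hypothetical 10-person team on annual billing:
print(yearly_cost("ChatGPT Business", 10))      # 3000
print(yearly_cost("Claude Team Standard", 10))  # 2400
print(claude_team_mix(standard=8, premium=2))   # 4320
```

Under these assumptions, moving just two of ten Claude seats to Premium pushes the yearly bill above ChatGPT Business on annual billing ($4,320 vs $3,000).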

Counterpoint: List price is only half the bill. Overages, soft limits, and model-specific throttling shape real cost per completed task. OpenAI’s “unlimited” language includes abuse guardrails, and Anthropic also applies session, weekly, or monthly controls based on capacity and model choice.

Practical recommendation: Price your plan against weekly workload, not monthly sticker cost. Estimate prompts, file-heavy sessions, and peak-time usage before choosing.
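One way to follow that advice is to convert the monthly price into a cost per completed task. This sketch rests on two assumptions. `WEEKS_PER_MONTH` is the 52/12 average. `completion_rate` is a hypothetical estimate of how many tasks finish without hitting a limit, not a vendor figure.

```python
WEEKS_PER_MONTH = 52 / 12  # about 4.33 weeks in an average month


def cost_per_completed_task(monthly_price: float,
                            tasks_per_week: float,
                            completion_rate: float = 1.0) -> float:
    """Monthly price divided by the tasks you actually finish in a month.

    completion_rate is a HYPOTHETICAL share of attempted tasks that finish
    without a usage limit, throttle, or redo getting in the way; estimate it
    from your own pilot rather than from vendor pages.
    """
    if tasks_per_week <= 0:
        raise ValueError("tasks_per_week must be positive")
    if not 0 < completion_rate <= 1:
        raise ValueError("completion_rate must be in (0, 1]")
    completed = tasks_per_week * WEEKS_PER_MONTH * completion_rate
    return monthly_price / completed


# Illustrative only: 30 tasks a week on a $20 plan where 90% finish cleanly,
# versus the same workload on a $200 plan where all of them do.
print(round(cost_per_completed_task(20, 30, 0.9), 3))   # 0.171
print(round(cost_per_completed_task(200, 30, 1.0), 3))  # 1.538
```

With these made-up numbers, the $20 plan stays far cheaper per task. The $200 tier pays off only if throttling on the lower tier costs you more than the price gap, for example through lost time, which this sketch does not price.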

Sources and date checked (February 16, 2026):

- OpenAI ChatGPT pricing: https://openai.com/chatgpt/pricing/

- OpenAI Help, ChatGPT Plus: https://help.openai.com/en/articles/6950777-chatgpt-plus

- OpenAI Help, ChatGPT Pro: https://help.openai.com/en/articles/9793128-what-is-chatgpt-pro

- OpenAI Help, ChatGPT Business FAQ: https://help.openai.com/en/articles/8542115

- Claude pricing: https://claude.com/pricing

- Claude Help, Pro plan usage: https://support.claude.com/en/articles/8324991-about-claude-s-pro-plan-usage

- Claude Help, Team and seat-based usage: https://support.claude.com/en/articles/9267304-about-team-and-seat-based-enterprise-plan-usage

Where Each Tool Pulls Ahead

Claim: The “best AI tools” answer changes with workload shape, not brand loyalty.

Evidence: ChatGPT pulled ahead in my tests when tasks crossed modes quickly: summarize a policy doc, generate a client email, check a CSV trend, then draft a follow-up plan. Claude pulled ahead when a coding task stayed inside one long technical thread with repeated edits and tests. Third-party coding benchmarks show the same theme at the model level. Coding-focused evaluations such as the SWE-bench family and SWE-rebench tend to reward long-horizon code reliability, and Claude-family setups frequently rank near the top there. GPT-family setups remain highly competitive and are sometimes cheaper per run, depending on scaffold and effort settings; benchmark setup matters a lot.

Counterpoint: Benchmark wins are not product wins by default. Many public leaderboards test scaffolded agents or model variants, not the exact consumer app experience you pay for. A model can top a coding board and still feel slower in daily writing, or vice versa. This is why vendor docs plus direct workflow testing are more useful than a single leaderboard screenshot. Numbers are useful; context is mandatory.

Practical recommendation:

- Pick ChatGPT if you need one assistant for broad, cross-functional work and occasional coding.

- Pick Claude if you are a developer or technical operator spending long sessions in code-heavy loops.

- If you run a team, pilot both with the same 10-task internal benchmark for one week before committing to annual billing.

- Remember that every “unlimited” plan has a footnote.
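A team pilot only tells you something if both tools see identical tasks and you record limit hits alongside quality. Here is a minimal scoring sketch for a one-week, 10-task pilot. The `PilotResult` fields, the 1-5 scale, and the tool labels are assumptions to adapt to your own rubric.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class PilotResult:
    task: str        # one of your own internal tasks (hypothetical names)
    tool: str        # "ChatGPT" or "Claude"
    score: int       # reviewer rating on a 1-5 scale you define
    hit_limit: bool  # True if a usage cap interrupted the task


def same_task_set(results: list[PilotResult]) -> bool:
    """Check that every tool was run on exactly the same tasks."""
    tasks_by_tool: dict[str, set[str]] = {}
    for r in results:
        tasks_by_tool.setdefault(r.tool, set()).add(r.task)
    return len({frozenset(t) for t in tasks_by_tool.values()}) <= 1


def summarize(results: list[PilotResult]) -> dict[str, dict[str, float]]:
    """Mean score, limit hits, and task count per tool."""
    if not same_task_set(results):
        raise ValueError("tools were not run on the same task set")
    by_tool: dict[str, list[PilotResult]] = {}
    for r in results:
        if not 1 <= r.score <= 5:
            raise ValueError(f"score out of range for {r.task!r}")
        by_tool.setdefault(r.tool, []).append(r)
    return {
        tool: {
            "mean_score": fmean(r.score for r in rs),
            "limit_hits": sum(r.hit_limit for r in rs),
            "tasks": len(rs),
        }
        for tool, rs in by_tool.items()
    }
```

Fill the results list with your own reviewers’ ratings. The sketch encodes no scores from my test, and the same-task check guards against comparing tools on different workloads.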

The Verdict

ChatGPT wins for the majority of users right now because it delivers the strongest all-purpose package at the $20 entry point and scales cleanly into business workflows. Claude is the better specialist pick for coding-first users who value predictable high-intensity sessions and clearer practical usage framing.

Who should use it now:

- Choose ChatGPT now if you are a solo professional, creator, analyst, or manager who needs one assistant across many task types.

- Choose Claude now if your core work is code, refactoring, or technical writing linked to repositories and long iterative threads.

Who should wait:

- Wait if your organization needs strict procurement, regional data controls, or deep seat-level governance and you have not completed an internal pilot.

- Wait if your team’s cost model depends on hard guaranteed throughput; current plan language still leaves room for dynamic limits.

What to re-check in 30-60 days:

- Any plan pricing changes for ChatGPT Go/Plus/Pro and Claude Pro/Max/Team.

- Published usage-limit language, especially for “unlimited” tiers.

- New independent benchmark results that match your workload, not just general leaderboards.
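To put the 30-60 day re-check on a calendar, the date arithmetic is simple. This sketch assumes the window starts from the February 16, 2026 date on the sources list. `recheck_window` and `recheck_due` are hypothetical helper names.

```python
from datetime import date, timedelta

# Assumption: count the 30-60 day window from the "date checked" on the
# sources list; swap in your own publication or purchase date if different.
DATE_CHECKED = date(2026, 2, 16)


def recheck_window(checked: date, start_days: int = 30,
                   end_days: int = 60) -> tuple[date, date]:
    """First and last day of the re-check window."""
    return checked + timedelta(days=start_days), checked + timedelta(days=end_days)


def recheck_due(today: date, checked: date = DATE_CHECKED) -> bool:
    """True once today has reached the start of the window."""
    return today >= recheck_window(checked)[0]


start, end = recheck_window(DATE_CHECKED)
print(start, end)  # 2026-03-18 2026-04-17
```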